The goal

- The goal is to build a PyTorch model that takes the content of a file as input and predicts which extension the file most likely has.

This is possible with Logistic Regression.

Step 1: Gathering files

- I couldn’t find a place on the WWW that offers a large number of files of each type (e.g. 100 EXE files, 100 ZIP files, …). That’s why I wrote a Python script that searches my hard drive for files.

import os

- We iterate over all the extensions we care about and try to find 500 files of each. From each file, we take only the first 100 bytes. This should be enough, because the header information that usually makes it possible to identify a file's type/extension is usually located at the beginning of the file.

- Example: A PNG file starts with the following 8 bytes:

137 80 78 71 13 10 26 10

- After that, we create a JSON file for each extension and store the bytes in it.
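The collection step described above can be sketched roughly as follows. This is not the original script: the function names `collect_headers` and `write_headers`, their parameters, and the decision to skip unreadable files are assumptions made for illustration. Only the limits (500 files, 100 bytes) and the output file naming come from the text.

```python
import json
import os


def collect_headers(root, extensions, max_files=500, header_size=100):
    """Walk `root` and collect the first `header_size` bytes of up to
    `max_files` files for each extension in `extensions`."""
    headers = {ext: [] for ext in extensions}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            ext = os.path.splitext(name)[1].lstrip(".").lower()
            if ext not in headers or len(headers[ext]) >= max_files:
                continue
            try:
                with open(os.path.join(dirpath, name), "rb") as f:
                    data = f.read(header_size)
            except OSError:
                continue  # assumption: silently skip unreadable files
            headers[ext].append(list(data))  # bytes -> list of ints (0..255)
    return headers


def write_headers(headers, out_dir):
    """Write one JSON file per extension, e.g. output_bytes-png.txt."""
    for ext, rows in headers.items():
        path = os.path.join(out_dir, f"output_bytes-{ext}.txt")
        with open(path, "w") as f:
            json.dump(rows, f)
```

Files shorter than 100 bytes yield shorter rows here, so a later step has to decide how to handle them.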

The generated files

- This is what the file output_bytes-exe.txt looks like:

[

- This is what the file output_bytes-gif.txt looks like (it contains a few duplicates):

[
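Since the generated files can contain duplicate rows, one way to drop them before training is sketched below. The helper name `drop_duplicate_rows` is hypothetical and this step is not part of the original script.

```python
def drop_duplicate_rows(rows):
    """Return `rows` without repeated entries, keeping the first occurrence."""
    seen = set()
    unique = []
    for row in rows:
        key = tuple(row)  # lists are not hashable, tuples are
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique
```

Note that two different files can share the same first 100 bytes, so this removes duplicate inputs, not necessarily duplicate files.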

Step 2: Creating a model

Imports

import numpy as np

Constants

- These constants will be used later.

- The input_size decides how many of the first 100 bytes we use to train the model. We’ll use only the first 25 bytes.

file_extensions = ["pdf", "png", "jpg", "htm", "txt", "mp3", "exe", "zip", "gif", "xml", "json", "cs"]
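To illustrate how input_size cuts a stored row down to the bytes that are actually used, here is a minimal sketch. The helper name `to_input_vector` is hypothetical, and zero-padding files shorter than 25 bytes is an assumption; the original code may handle short files differently.

```python
input_size = 25


def to_input_vector(header, size=input_size):
    """Keep the first `size` byte values and zero-pad shorter inputs."""
    values = list(header[:size])
    values += [0] * (size - len(values))  # assumption: pad with zeros
    return values
```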

A helper function

- This is a helper function that computes the accuracy.

- That value is not needed by the model, but allows us to know how many of the predictions were correct.

def accuracy(outputs, labels):
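What such an accuracy value means can be shown without tensors. The following plain-Python sketch uses the hypothetical name `accuracy_plain` and assumes that `labels` holds class indices; the original function works on PyTorch tensors and may differ in detail.

```python
def accuracy_plain(outputs, labels):
    """Fraction of rows whose highest-scoring class equals the label index."""
    predictions = [row.index(max(row)) for row in outputs]
    correct = sum(p == t for p, t in zip(predictions, labels))
    return correct / len(labels)
```

For example, with three predictions of which two hit the correct class, the function returns 2/3.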

The model itself

- We create a model with 4 layers. The first layer has 25 inputs, one for each of the first 25 bytes of the file. The 2nd layer also has 25 inputs. The next 2 layers have 16 inputs each.

- The number of outputs of the last layer is the number of possible file extensions we consider.

- The model uses the accuracy function from above to provide us with additional information during the training.

- We are using cross entropy for the loss calculation and softmax to get numbers that sum up to 1 in the end.

class FileClassifierModel(nn.Module):
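What softmax and cross entropy compute can be shown with the standard library alone. This is a sketch for illustration, not the model's code, and the function names are chosen here. If the model computes its loss with PyTorch's `torch.nn.functional.cross_entropy`, that function already applies a log-softmax internally, so an explicit softmax would only be needed to read off probabilities.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, target_index):
    """Negative log-probability that softmax assigns to the correct class."""
    return -math.log(softmax(logits)[target_index])
```

The loss is 0 when the model puts all probability on the correct class and grows as that probability shrinks.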

Step 3: Loading the data and preparing them for PyTorch

- We make sure that there are training data and validation data.

- We need to somehow provide the model with the expected output (target values). We do this by creating an array of only zeros (one zero for each possible file type) and setting the first number to 1 in case of pdf, the second number to 1 in case of png, …

input_array = []
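The target encoding described above can be sketched as follows. The helper name `one_hot` is hypothetical; the extension list is the one from the constants.

```python
file_extensions = ["pdf", "png", "jpg", "htm", "txt", "mp3",
                   "exe", "zip", "gif", "xml", "json", "cs"]


def one_hot(extension, extensions=file_extensions):
    """Return a list of zeros with a single 1 at the extension's position."""
    target = [0] * len(extensions)
    target[extensions.index(extension)] = 1
    return target
```

Here `one_hot("pdf")` has its 1 in the first position and `one_hot("png")` in the second.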

Step 4: Helper functions to train

def evaluate(model, val_loader):

- The evaluation result is passed to epoch_end, where we print it.
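The flow from per-batch results to the printed line can be sketched in plain Python. The helper `combine_batch_results` and the unweighted averaging are assumptions; only the name `epoch_end` and the keys `val_loss` and `val_acc` appear in the post.

```python
def combine_batch_results(batch_results):
    """Average per-batch validation loss and accuracy (unweighted mean)."""
    n = len(batch_results)
    return {
        "val_loss": sum(r["val_loss"] for r in batch_results) / n,
        "val_acc": sum(r["val_acc"] for r in batch_results) / n,
    }


def epoch_end(epoch, result):
    """Print the epoch number followed by the combined result."""
    print(epoch, result)
```

An unweighted mean is only exact when all batches have the same size; a smaller last batch would be slightly over-weighted.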

Step 5: Training

execution

model = FileClassifierModel()

The output

0 {'val_loss': 8.624412536621094, 'val_acc': 0.10610464960336685}

- We can see that the achieved accuracy (the share of files that are classified correctly) on our evaluation data is around 90%.

Step 6: Evaluation with files from the WWW

- Let’s try out the model with a few files from the WWW…

import urllib
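Fetching only the header bytes of a remote file could look like the sketch below. The function name `fetch_header` is hypothetical and the original code may download the files differently. Note that the submodule has to be imported as `urllib.request`; a bare `import urllib` does not reliably make it available.

```python
import urllib.request


def fetch_header(url, header_size=100):
    """Download at most `header_size` bytes from `url` as a list of ints."""
    with urllib.request.urlopen(url) as response:
        return list(response.read(header_size))
```

The returned list has the same shape as a row in the generated training files, so it can be truncated to input_size in the same way.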

Output


Analysis

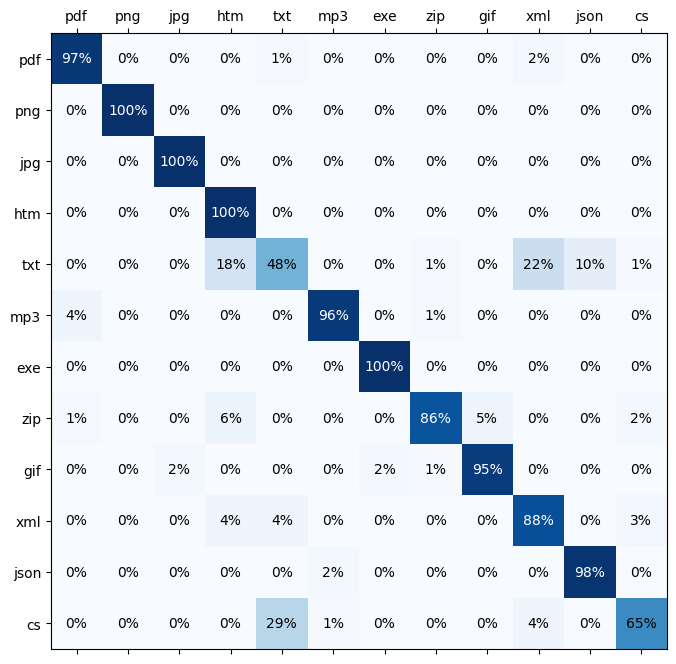

(Mis)classification matrix

- Let’s generate a matrix that tells us how each of the byte inputs is classified…

import numpy as np

- Explanation

- The value on the left stands for the correct/actual extension.

- The value at the top stands for the prediction of the model.

- First row: Of all PDF files, 97% were classified as PDF, 1% as TXT and 2% as XML.

- We can see that the major misclassified extensions are CS (as TXT) and TXT (as XML or HTM).
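A matrix of this kind, with the actual extension per row and the predicted extension per column, can be computed as sketched below. This plain-Python version with the hypothetical name `confusion_matrix_percent` is for illustration; the original code uses NumPy and may be structured differently.

```python
def confusion_matrix_percent(actual, predicted, labels):
    """Row i, column j: percentage of files with actual label i
    that were predicted as label j."""
    index = {label: i for i, label in enumerate(labels)}
    counts = [[0] * len(labels) for _ in labels]
    for a, p in zip(actual, predicted):
        counts[index[a]][index[p]] += 1
    matrix = []
    for row in counts:
        total = sum(row)
        matrix.append([100 * c / total if total else 0.0 for c in row])
    return matrix
```

Each row with at least one sample sums to 100, which is why the rows can be read as "of all files of this type, x% were classified as ...".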

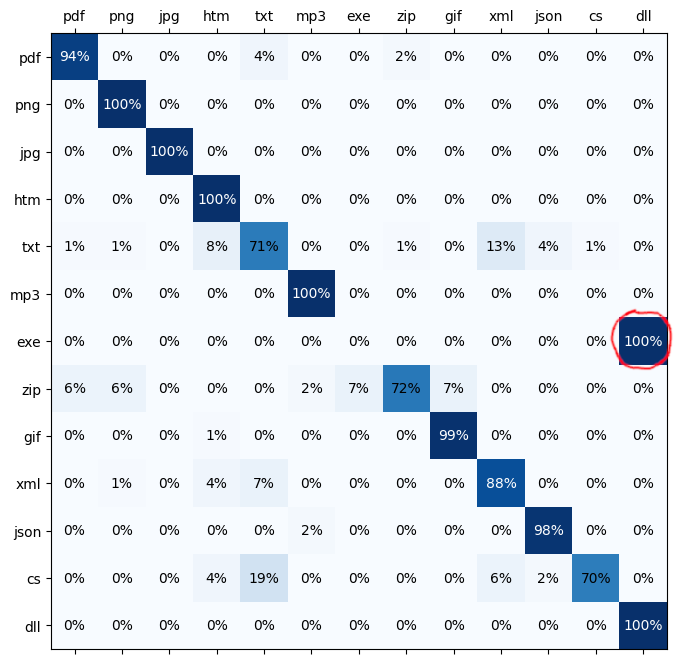

exe and dll

- Whether the input file is an exe file or a dll file, the model tends to predict DLL and EXE with a probability of around 0.5 (50%) each. The exe and dll files from my hard drive do not seem to be easily distinguishable.

- In this figure, we can see a misclassification that can be reliably observed when EXE and DLL are both involved:
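One likely reason for this: on Windows, EXE and DLL files are both Portable Executable (PE) images and both begin with the same "MZ" header. The flag that marks an image as a DLL sits in the COFF header, which is found via an offset stored at byte 0x3C, well beyond the first 25 bytes. The sketch below reads that flag; the function name `pe_is_dll` is chosen for illustration and the check is not part of the model.

```python
import struct

IMAGE_FILE_DLL = 0x2000  # bit in the COFF "Characteristics" field


def pe_is_dll(data):
    """Return True/False for a PE image, or None if `data` is not a
    (sufficiently long) PE file."""
    if len(data) < 0x40 or data[:2] != b"MZ":
        return None
    (pe_offset,) = struct.unpack_from("<I", data, 0x3C)
    if len(data) < pe_offset + 24 or data[pe_offset:pe_offset + 4] != b"PE\0\0":
        return None
    # Characteristics is the last 2-byte field of the 20-byte COFF header,
    # which follows the 4-byte "PE\0\0" signature.
    (characteristics,) = struct.unpack_from("<H", data, pe_offset + 22)
    return bool(characteristics & IMAGE_FILE_DLL)
```

A model that only sees the first 25 bytes cannot see this field, which would fit the roughly 50/50 predictions.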

cs

- CS files are C# source code files, but the model classifies some of them as TXT. Why is that?

- We consider only the first 25 bytes of the file.

- The cs file from the web started with namespace HelloWorld and does not contain any using directives at the beginning.

- But the training files on my hard drive usually contain lots of usings. Therefore, the model has not had the opportunity to learn other ways a C# file might appear (e.g. without usings, with a block scoped namespace, a file scoped namespace, a comment block, many line breaks, …)
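How different the first 25 bytes of two valid C# files can be is easy to see with a small experiment. Both source strings below are made up for this illustration and are not taken from the training data or from the file used in the test.

```python
input_size = 25

# Hypothetical C# sources, invented for this example.
with_usings = "using System;\nusing System.Linq;\n\nnamespace HelloWorld\n{\n}\n"
without_usings = "namespace HelloWorld\n{\n    class Program { }\n}\n"


def first_bytes(source, size=input_size):
    """Return the first `size` bytes of the UTF-8 encoded source."""
    return source.encode("utf-8")[:size]


for source in (with_usings, without_usings):
    print(first_bytes(source))
```

The two 25-byte windows have almost nothing in common, so a model trained mostly on the first pattern has little to go on for the second.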

Worth mentioning

Mistake

- Making mistakes is sometimes useful. Instead of passing the bytes 0 - 24 to the model for training, I once passed the bytes 75 - 99 to it (the last 25 of the 100 first bytes, not the first 25), because of a mistake in the Python syntax.

- The loss value stayed pretty high and of course, that makes sense, especially for many binary files, because the most relevant header information bytes that indicate the file type are usually placed right at the beginning of a file.
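The exact mistake is not shown in the post, but a slicing slip of the following kind would produce this effect. The variable names are hypothetical.

```python
input_size = 25
header = list(range(100))  # stand-in for the first 100 bytes of a file

first_25 = header[:input_size]   # bytes 0 - 24 (intended)
last_25 = header[-input_size:]   # bytes 75 - 99 (the accidental variant)

print(first_25[0], first_25[-1])
print(last_25[0], last_25[-1])
```

Both slices have the right length, so nothing fails at runtime; only the poor loss value reveals the problem.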

Input size

- I started with an input size of 100 bytes, but it turned out that the first 25 bytes were considerably more important than the bytes 25 - 99 (on average; this may not be true for a cs file, see above).

- Decreasing the input size to 25 bytes made the training much faster.

Further improvements

- The result can be further improved by:

- using more training data

- using more diverse training data

- eliminating duplicated training files

- considering more than just the first 25 bytes

- using more advanced machine learning techniques

- Google developed a tool called Magika, which seems to be a lot better, but they have probably used more training data from people who are unaware of it. 😉